Demystifying Rate Limiting: A Comprehensive Guide

How many requests do I have left?

You may have run into this while trying to remember your smartphone PIN or password. You make a couple of attempts to enter the correct PIN, and at some point you see a message like: "You've entered an incorrect password multiple times, please wait for 1 hour and try again." Or perhaps a service cut you off with a message that says: "Fair usage limit exceeded."

They can be rather frustrating, right? Well, you just experienced an application of Rate Limiting.

In this piece, we are going on a deep dive into the nitty-gritty of rate limiting, rate limiting algorithms, and one part that's not spoken about enough: the impact of rate limiting on business and product.

It's a comprehensive guide, so here's some popcorn to see you through 🍿

Understanding Rate Limiting

Rate limiting is a mechanism used to control the rate at which clients or users can make requests to a server, API, or any resource. It is crucial in preventing abuse, ensuring resource availability, and maintaining system performance.

One of the key purposes of rate limiting is to keep chaos at bay:

Imagine a world without rate limiting. In this scenario, any client, whether well-intentioned or malicious, could bombard a server with an endless stream of requests. This overload could cripple the server, rendering it unresponsive and frustrating legitimate users.

Rate limiting works like a digital safety net and contributes to application and network security. It's like a virtual bouncer at the door of a nightclub, ensuring that everyone gets a fair chance to enter while preventing rowdy behaviour.

Why is Rate Limiting Important?

Preventing Abuse and Overload: Rate limiting is the first line of defence against abuse and overload. It prevents clients from flooding servers with requests and causing system-wide havoc.

Resource Allocation and Fairness: Rate limiting gives each client a reasonable share of resources, so that no single user can crowd out the others.

Upholding System Stability: Rate limiting maintains order when software systems generate unpredictable digital traffic. It ensures that servers don't get overwhelmed by sudden spikes in requests, resulting in a stable and reliable system.

Security and Resilience: Rate limiting is a powerful ally in cybersecurity. It makes it harder for attackers to launch brute-force or denial-of-service attacks, enhancing system security and resilience.

Economical Resource Utilization: It's not just about performance; it's also about efficiency. Rate limiting prevents unnecessary resource consumption, helping organizations optimize infrastructure costs and reduce energy usage.

At its core, rate limiting shapes the user experience. When implemented effectively, it ensures that users enjoy reliable and secure access to services, leading to happier customers and business success.

That said, let's get into the details!

How does Rate Limiting Work?

Rate limits are controlled by the developers and/or admins and are set in the configuration of a system or an API. An admin might configure the rate limiting mechanism to track two main factors:

The IP address of the users making the requests

The time difference between each request.

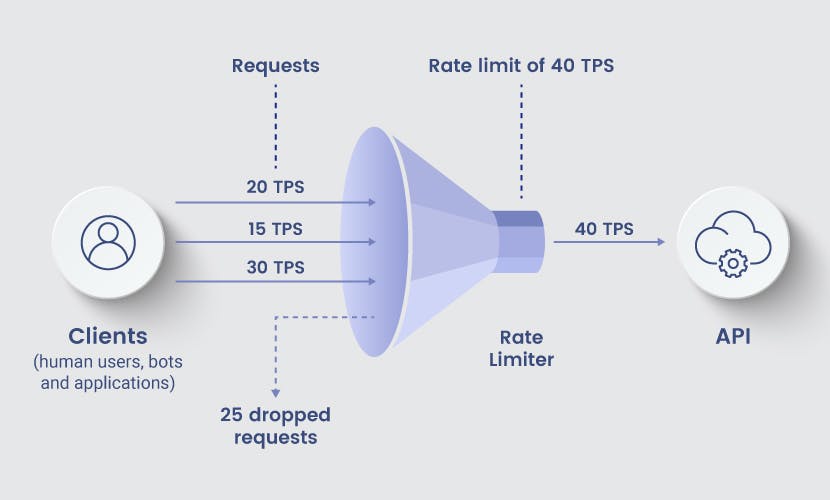

Rate limiting throttles requests by tracking each client's request rate, often measured in transactions per second (TPS), and comparing it against a configured limit.

If a single IP address sends an excessive number of requests within a defined timeframe, surpassing its designated TPS limit, rate limiting intervenes by suspending the server's or API's responses to that address.

When a request arrives, the server checks if it exceeds the defined rate limit. If the request rate is within limits, it is processed; otherwise, the server takes appropriate action, often returning an HTTP 429 status code (Too Many Requests).

Image Credit: https://phoenixnap.com/
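To make the check concrete, here is a minimal sketch of a server-side decision that tracks the two factors mentioned above: the client's IP address and the time since its last request. The `MIN_INTERVAL` value, the in-memory `_last_seen` store, and the `handle` function are all assumptions made for illustration, not part of any particular framework.

```python
import math
import time

# Hypothetical policy: one request every 0.5 s per IP (roughly 2 TPS).
MIN_INTERVAL = 0.5

# ip -> time of the last accepted request (in-memory, illustration only)
_last_seen = {}

def handle(ip, now=None):
    """Return (status_code, headers) for a request from `ip`."""
    now = time.monotonic() if now is None else now
    last = _last_seen.get(ip)
    if last is not None and now - last < MIN_INTERVAL:
        # Too soon: reject and tell the client how long to wait.
        wait = MIN_INTERVAL - (now - last)
        return 429, {"Retry-After": str(math.ceil(wait))}
    _last_seen[ip] = now
    return 200, {}
```

The `Retry-After` header is expressed in whole seconds, which is why the remaining wait is rounded up.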

The process of deliberately regulating the flow of incoming requests to ensure that they adhere to predetermined rate limits is called throttling.

Throttle Process of Rate Limiting:

Defining Rate Limits: Rate limits are established based on specific criteria such as the number of requests per second, minute, or any other time unit. These limits define the maximum allowable request rate.

Tracking Request Rate: To enforce rate limits, the system continuously monitors the rate at which requests arrive. This monitoring can be done using counters, timers, or other tracking mechanisms and algorithms. We will talk about rate limiting algorithms in the next section.

Throttle Decision: When a new request arrives, the rate-limiting system checks whether it would exceed the predefined rate limits if processed immediately. If the request exceeds the limits, the system enacts throttling measures.

Throttling Actions:

Delay: Throttling often involves introducing a delay or holding the request in a queue until it can be processed without violating the rate limit. This delay allows the system to slow down the request rate to an acceptable level.

Request Rejection: In some cases, throttling may result in rejecting requests that would otherwise cause the rate limits to be exceeded. These rejected requests typically receive an error response, such as an HTTP 429 (Too Many Requests) status code.
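The throttle decision and the two throttling actions can be sketched as a single function. This is an illustrative sketch only: the `throttle` function, its parameters, and the idea of a `max_delay` cut-off between "delay" and "reject" are assumptions, not a standard API.

```python
def throttle(now, next_free, interval, max_delay):
    """Decide what to do with a request arriving at time `now`.

    next_free: earliest time at which the next request may be processed
    interval:  minimum spacing between processed requests (1 / rate)
    max_delay: longest time we are willing to hold a request (assumed policy)

    Returns (action, wait_seconds, new_next_free).
    """
    wait = max(0.0, next_free - now)
    if wait > max_delay:
        # Holding the request would take too long: reject (e.g. HTTP 429).
        return "reject", 0.0, next_free
    action = "delay" if wait > 0 else "process"
    # Reserve the slot this request will occupy.
    return action, wait, max(now, next_free) + interval
```

A caller would sleep or queue for `wait_seconds` on "delay", and pass `new_next_free` into the next call.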

Rate Limiting Algorithms

Rate limiting relies on various tracking mechanisms and algorithms to monitor and enforce rate limits. These mechanisms and algorithms are designed to ensure that requests or actions are controlled within predefined limits.

Let's look at some of them:

1. Counter-Based Tracking:

Counter: A simple mechanism that counts the number of requests within a given time interval. When the count exceeds the rate limit, further requests are throttled or rejected.

Sliding Window Counters: These maintain a rolling count of requests within a sliding time window, allowing for more granular control over rate limits.
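One common way to build a sliding window counter is to keep only two counts (the current and the previous fixed window) and weight the previous one by how much of it still overlaps the sliding window. The sketch below assumes that approach; the class name and parameters are made up for illustration, and the result is an estimate rather than an exact count.

```python
class SlidingWindowCounter:
    def __init__(self, limit, window, now=0.0):
        self.limit = limit            # max requests per sliding window
        self.window = window          # window length in seconds
        self.current_start = now      # start of the current fixed window
        self.current_count = 0
        self.previous_count = 0

    def allow(self, now):
        elapsed = now - self.current_start
        if elapsed >= self.window:
            windows_passed = int(elapsed // self.window)
            # If more than one whole window went by, the previous one is empty.
            self.previous_count = self.current_count if windows_passed == 1 else 0
            self.current_count = 0
            self.current_start += windows_passed * self.window
            elapsed = now - self.current_start
        # Fraction of the previous window still inside the sliding window.
        overlap = 1.0 - elapsed / self.window
        estimated = self.previous_count * overlap + self.current_count
        if estimated + 1 > self.limit:
            return False
        self.current_count += 1
        return True
```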

2. Token Bucket Algorithm:

Token Generation: Tokens are added to a virtual bucket at a fixed rate. Each request consumes a token. Requests can be processed if tokens are available; otherwise, they are delayed or rejected.

Token Expiration: Tokens can have a timestamp associated with them, and expired tokens are removed to ensure fairness and adherence to rate limits. This raises a question:

Are authentication tokens an application of Rate Limiting?
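Before moving on, the token generation step described above can be sketched in a few lines. This is a minimal, single-threaded sketch: the `TokenBucket` class, its `rate` and `capacity` parameters, and the lazy refill on each call are illustrative choices, not the only way to implement the algorithm.

```python
class TokenBucket:
    def __init__(self, rate, capacity, now=0.0):
        self.rate = rate            # tokens added per second
        self.capacity = capacity    # maximum tokens the bucket can hold
        self.tokens = capacity      # start full, which permits an initial burst
        self.updated = now

    def allow(self, now, cost=1):
        # Refill lazily for the time elapsed since the last call,
        # never exceeding the bucket's capacity.
        refill = (now - self.updated) * self.rate
        self.tokens = min(self.capacity, self.tokens + refill)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost     # each request consumes a token
            return True
        return False                # no token available: delay or reject
```

Because unused tokens accumulate up to `capacity`, a client that has been idle can send a short burst, while the long-run rate stays bounded by `rate`.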

3. Leaky Bucket Algorithm:

- Bucket with a Leak: Incoming requests fill a bucket that drains at a constant leak rate. As long as there is room in the bucket, a request is accepted; if the bucket would overflow, the request is delayed or rejected.
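Here is a small sketch of the "meter" flavour of the leaky bucket, which tracks only the bucket's fill level instead of holding an actual queue of requests. The class and parameter names are assumptions for illustration; a queue-based variant would instead release stored requests at the leak rate.

```python
class LeakyBucket:
    def __init__(self, leak_rate, capacity, now=0.0):
        self.leak_rate = leak_rate  # units drained from the bucket per second
        self.capacity = capacity    # how much the bucket can hold
        self.level = 0.0            # current fill level
        self.updated = now

    def allow(self, now):
        # Drain the bucket for the time that has passed since the last call.
        leaked = (now - self.updated) * self.leak_rate
        self.level = max(0.0, self.level - leaked)
        self.updated = now
        if self.level + 1 > self.capacity:
            return False            # bucket would overflow
        self.level += 1             # this request adds one unit
        return True
```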

4. Fixed Window Algorithm:

- Time Window: Requests are tracked within fixed time windows (e.g., one minute). When a window expires, the request count resets. Requests exceeding the limit within a window are throttled.
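A fixed window limiter needs very little state, as this sketch suggests. The `FixedWindowLimiter` name and its parameters are hypothetical, and time is passed in explicitly to keep the example easy to follow.

```python
class FixedWindowLimiter:
    def __init__(self, limit, window):
        self.limit = limit        # max requests per window
        self.window = window      # window length in seconds
        self.window_id = None     # which window we are currently counting
        self.count = 0

    def allow(self, now):
        window_id = int(now // self.window)
        if window_id != self.window_id:
            # A new window has started: reset the counter.
            self.window_id = window_id
            self.count = 0
        if self.count >= self.limit:
            return False
        self.count += 1
        return True
```

One known trade-off: with a limit of 2 per 60 seconds, requests at seconds 58 and 59 and again at seconds 60 and 61 are all accepted, so a client can briefly send twice the limit around a window boundary.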

5. Rolling Window Algorithm:

- Sliding Time Window: Similar to fixed windows but with a sliding time window that moves forward in time as new requests arrive. This allows for more precise rate limiting over time.
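One way to get a truly sliding window is to keep a log of recent request timestamps and count only those still inside the window. The sketch below assumes that "sliding window log" approach; the names are illustrative, and memory use grows with the limit because every accepted request is stored.

```python
from collections import deque

class SlidingWindowLog:
    def __init__(self, limit, window):
        self.limit = limit          # max requests in any `window` seconds
        self.window = window
        self.timestamps = deque()   # times of accepted requests, oldest first

    def allow(self, now):
        # Discard timestamps that have slid out of the window.
        while self.timestamps and now - self.timestamps[0] >= self.window:
            self.timestamps.popleft()
        if len(self.timestamps) >= self.limit:
            return False
        self.timestamps.append(now)
        return True
```

Compared with a fixed window, the same 2-per-60-seconds limit now rejects requests at seconds 60 and 61 if seconds 58 and 59 were already used.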

6. Distributed Rate Limiting:

- Distributed Systems: In distributed environments, tracking mechanisms often involve distributed algorithms that synchronize rate limiting across multiple servers or nodes to ensure consistency and fairness.
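A common pattern is for every node to count against one shared store that supports atomic increments. The sketch below uses an in-memory `SharedStore` class as a stand-in for such a store; in a real deployment this would be an external service, and both class names and the key layout here are assumptions for illustration.

```python
import threading

class SharedStore:
    """In-memory stand-in for a central store with atomic increments."""
    def __init__(self):
        self._lock = threading.Lock()
        self._counts = {}

    def incr(self, key):
        with self._lock:  # makes the read-modify-write atomic
            self._counts[key] = self._counts.get(key, 0) + 1
            return self._counts[key]

class Node:
    """One server in the cluster; all nodes share the same store."""
    def __init__(self, store, limit, window):
        self.store = store
        self.limit = limit
        self.window = window

    def allow(self, client_id, now):
        # All nodes derive the same key for the same client and window,
        # so the count is global rather than per node.
        key = (client_id, int(now // self.window))
        return self.store.incr(key) <= self.limit
```

This sketch never removes old keys; a real store would typically expire them once their window has passed.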

7. Dynamic Rate Limiting:

- Adaptive Rate Limits: Some systems employ dynamic rate limiting, adjusting rate limits in real-time based on system health, load, or client behaviour. This can help prevent service degradation during traffic spikes.
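As a rough illustration, an adaptive limit could be recomputed periodically from a load signal. The sketch below assumes a simple "halve under pressure, recover slowly" rule; the `adjust_limit` function, the `load` measure between 0 and 1, and all the thresholds are hypothetical choices.

```python
def adjust_limit(current, load, floor=10, ceiling=1000, target=0.75, step=10):
    """Return a new requests-per-second limit.

    current: the limit currently in force
    load:    system load between 0.0 and 1.0 (e.g. CPU utilisation)
    """
    if load > target:
        new_limit = current // 2      # back off quickly when overloaded
    else:
        new_limit = current + step    # recover gradually when healthy
    # Keep the limit within sane bounds.
    return max(floor, min(ceiling, new_limit))
```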

8. Request Queuing and Delay: In cases of throttling, requests may be queued and delayed until they can be accommodated within the rate limits. Clients may experience delays but can eventually submit their requests.

9. Exponential Backoff: This is a client-side response to rate limiting. Clients that exceed rate limits may implement exponential backoff, where they wait for an increasingly longer time before retrying a request. This helps reduce the load on the server during congestion.
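On the client side, exponential backoff might look like the sketch below. The `call_with_backoff` helper and its `send` callback (which is assumed to return an HTTP status code) are made up for illustration; the random jitter is an addition beyond what is described above, included so that many clients do not all retry at the same instant.

```python
import random
import time

def call_with_backoff(send, max_retries=5, base=1.0, cap=60.0, sleep=time.sleep):
    """Call `send()` until it stops returning 429 or retries run out."""
    for attempt in range(max_retries + 1):
        status = send()
        if status != 429:
            return status
        if attempt == max_retries:
            break
        # Wait up to base * 2^attempt seconds, capped at `cap`.
        delay = min(cap, base * 2 ** attempt)
        sleep(random.uniform(0, delay))
    return 429
```

If the server sends a `Retry-After` header, a client would usually honour that value instead of its own computed delay.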

10. User-Specific Rate Limiting: Rate limiting can also be applied on a per-user or per-API-key basis, allowing for user-specific rate limits tailored to different levels of service.
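Per-key limits also make it easy to answer the question "how many requests do I have left?". The sketch below assumes two hypothetical tiers, "free" and "pro", with made-up limits, and counts requests per API key in fixed windows.

```python
# Hypothetical tiers and limits (requests per window), for illustration only.
TIER_LIMITS = {"free": 2, "pro": 5}

class PerKeyLimiter:
    def __init__(self, window=60):
        self.window = window
        self.counts = {}  # (api_key, window_id) -> requests used

    def allow(self, api_key, tier, now):
        """Return (allowed, remaining) for this key in the current window."""
        limit = TIER_LIMITS[tier]
        key = (api_key, int(now // self.window))
        used = self.counts.get(key, 0)
        if used >= limit:
            return False, 0
        self.counts[key] = used + 1
        return True, limit - used - 1
```

The `remaining` value is the kind of number an API could report back to clients so they can pace themselves.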

I think this has been a ride so far. Let's refill your popcorn before we consider the last section in this piece. 🍿

Impact of Rate Limiting on Business and Product

Rate Limiting did not start with digital services or software products. The concept of restricting customer requests to allow fair usage has always existed in businesses that require such an approach.

As software products became more common, rate limiting has continued to have a significant impact on both the business and product aspects of companies that offer online services, APIs, or digital products.

The relationship between rate limiting and business/product can be understood in several key ways. Let's start by answering the question I raised earlier:

Are authentication tokens an application of Rate Limiting?

The answer is:

Authentication uses rate limiting to throttle the amount of time an authenticated user can continue to access a resource with their authenticated state. After that time has elapsed, the user is no longer recognized, so every subsequent request is rejected until they re-authenticate.

The authentication expiration part of the authentication process is an application of rate limiting.

Let's look at some other aspects of where rate limiting meets business and product.

1. User Experience:

Impact on Business: Rate limiting directly affects user experience. It ensures that users have a reliable and consistent experience when interacting with the product or service. Poorly managed rate limiting can lead to frustrated users, increased churn, and negative reviews.

Product Aspect: The product's success often hinges on the quality of the user experience. Rate limiting is a crucial component of ensuring a seamless and responsive user experience, which can lead to higher user retention and customer loyalty.

2. Scalability:

Impact on Business: Scalability is critical for businesses that want to accommodate growth in user numbers and traffic. Rate limiting helps manage the load on the infrastructure during periods of high demand, allowing the business to scale effectively without compromising performance.

Product Aspect: Scalability considerations are part of the product's design and architecture. A product that can handle increased user activity without degradation in performance is more attractive to customers and investors.

3. Security:

Impact on Business: Rate limiting plays a crucial role in security by mitigating the risk of abuse, such as DDoS attacks, brute force login attempts, or data scraping. Effective rate limiting helps protect sensitive user data and ensures the integrity of the service.

Product Aspect: Security is a feature that directly impacts a product's reputation and customer trust. Implementing rate limiting to thwart security threats is an essential product design consideration.

4. Fair Resource Allocation:

Impact on Business: Rate limiting ensures that resources are allocated fairly among all users or clients. This fairness can be a selling point for a product, as customers appreciate equitable access to resources.

Product Aspect: Fair resource allocation is an aspect of product design that contributes to customer satisfaction. A product that offers fair access to its features and services is more likely to gain positive reviews and recommendations.

5. Compliance and Regulation:

Impact on Business: Many industries and regions have specific regulations related to data handling, privacy, and service availability. Rate limiting can assist businesses in complying with these regulations, avoiding legal issues and fines.

Product Aspect: Compliance with regulatory requirements may be a product requirement. Rate limiting features can be designed and integrated into the product to facilitate compliance.

6. Monetization and Pricing Models:

Impact on Business: Rate limiting can be used as part of pricing models for products or services. For example, businesses may offer different rate limits or access tiers to customers based on their subscription level.

Product Aspect: Rate limiting can be a fundamental component of a product's pricing strategy, affecting how different customer segments are billed and what they can access.

What I am emphasising here is that rate limiting is not just a technical consideration; it has a profound impact on the success and perception of a business and its products.

TL;DR Summary

We've ventured into the fundamental principles and practical applications of rate limiting. It acts as a digital guardian that prevents chaos and serves as the backbone of system security and user experience. It also ensures fair resource allocation, upholds system stability, and optimizes resource utilization.

We've uncovered its role in controlling request rates, using tracking mechanisms, and implementing various algorithms, from counters to token buckets and distributed solutions.

However, the significance of rate limiting extends beyond technical aspects. Its impact on businesses and products is profound. Rate limiting shapes user experiences, fosters scalability, enhances security, ensures fairness, aids compliance, and influences monetization models.

Rate limiting is both a technical safeguard and a strategic business tool, underpinning the success and trustworthiness of services and products alike.